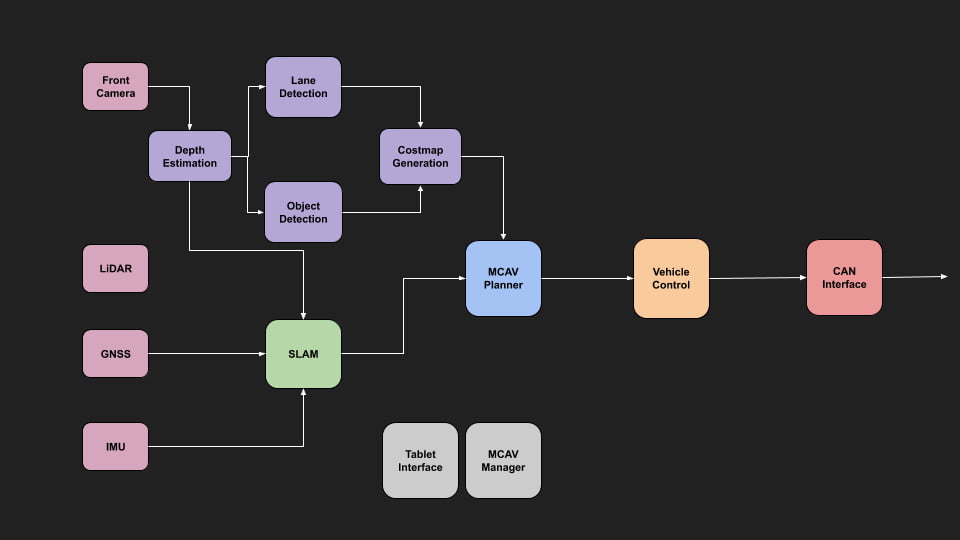

The software team has continued to develop the vehicle's perception, planning and control systems. Our two major themes this semester have been writing more of our own code and reducing our dependence on predefined HD maps. Both themes have pushed us to improve our software engineering practices so that we can handle these more computationally complex tasks.

MCAV Software Overview

Team Projects

Our perception team has two major projects:

The first is lane detection, which uses a neural network to detect our driving lane (shaded in red) and a more general drivable area (shaded in blue). Previously, we relied on lanes being predefined as part of the map. With this system, we can detect where the vehicle can drive even when the road structure breaks down, such as in unmarked areas or car parks.

Lane Detection – Segmentation Model

Secondly, the object detection team has been working on improving the performance of our detection stack and retraining its models to be more specific to autonomous driving.

Object Detection – Updated Training

Our planning team has been working on their own path planning algorithm based on hybrid A*, which is useful for navigating in highly unstructured, low-speed environments.

MCAV Planner
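To give a feel for how hybrid A* differs from grid-based A*, here is a minimal sketch of its node-expansion step. It is not the MCAV planner's code: the function names, the kinematic bicycle model, and every parameter value (step length, wheelbase, steering angles, cell size) are assumptions chosen for illustration. Each continuous pose is expanded with a few short driveable arcs, and poses are binned into coarse cells so that near-duplicates can be pruned.

```python
import math


def expand(state, step=0.5, wheelbase=2.7, steer_angles=(-0.5, 0.0, 0.5)):
    """Return the poses (x, y, heading) reachable from `state` in one step.

    Uses a simple kinematic bicycle model. All defaults are illustrative
    assumptions, not values from the real vehicle.
    """
    x, y, theta = state
    successors = []
    for steer in steer_angles:
        for direction in (1, -1):  # drive forwards, then in reverse
            d = direction * step
            new_x = x + d * math.cos(theta)
            new_y = y + d * math.sin(theta)
            # Heading changes in proportion to distance travelled and steering.
            new_theta = (theta + d / wheelbase * math.tan(steer)) % (2 * math.pi)
            successors.append((new_x, new_y, new_theta))
    return successors


def grid_key(state, cell=0.5, heading_bins=72):
    """Discretise a continuous pose so visited states can be de-duplicated."""
    x, y, theta = state
    bin_width = 2 * math.pi / heading_bins
    return (
        math.floor(x / cell),
        math.floor(y / cell),
        int(theta / bin_width) % heading_bins,
    )


if __name__ == "__main__":
    for pose in expand((0.0, 0.0, 0.0)):
        print(pose, grid_key(pose))
```

A full planner would wrap this in a priority-queue search with a heuristic and collision checks. The key idea shown here is that the search keeps continuous, driveable poses while using the grid only for bookkeeping, which is what makes the approach suit car parks and other low-speed, unstructured areas.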

Our controls team has developed their own interface to the vehicle to read (and soon write) data from the CAN Bus. This gives us access to all the sensor data, computer states, and actuator controls.

CAN Interface Output
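As a rough illustration of what reading from the CAN bus involves, the sketch below decodes one raw frame into physical values. The frame ID, byte layout and scaling factors are entirely hypothetical and are not taken from the vehicle's actual message definitions; the point is only to show the unpack-and-scale pattern.

```python
import struct

# Hypothetical signal layout (NOT the vehicle's real CAN database):
#   bytes 0-1: wheel speed, unsigned 16-bit big-endian, 0.01 km/h per bit
#   bytes 2-3: steering angle, signed 16-bit big-endian, 0.1 degree per bit
HYPOTHETICAL_FRAME_ID = 0x123


def decode_frame(frame_id, data):
    """Decode one frame into named signals, or return None if not recognised."""
    if frame_id != HYPOTHETICAL_FRAME_ID or len(data) < 4:
        return None
    raw_speed, raw_angle = struct.unpack_from(">Hh", data, 0)
    return {
        "speed_kmh": raw_speed / 100,    # apply the assumed scale factor
        "steering_deg": raw_angle / 10,  # signed, so left/right are both covered
    }


if __name__ == "__main__":
    # Build an example 8-byte payload by hand and decode it again.
    payload = struct.pack(">Hh", 1250, -150) + bytes(4)
    print(decode_frame(HYPOTHETICAL_FRAME_ID, payload))
```

Writing to the bus would be the reverse: scale the physical value to a raw integer, pack it into the payload, and send the frame.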

Final Year Projects

This semester we had two Final Year Project (FYP) students, Aryan Faghihi and Yin Lee Ho.

Aryan worked on designing an end-to-end autonomous driving system. This system is designed to imitate the behaviour of a human driver using data from a single front camera. Using a neural network, he showed that focusing training on the cases where the model makes mistakes (active learning) gives better performance than purely collecting more data.

End-to-End Autonomous Driving

Active Learning Experiments
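The idea of focusing training on the model's mistakes can be sketched as a simple selection step. This is only an assumed, simplified version of the approach: the selection criterion (absolute steering error against the human driver), the function name, and the sample format are all hypothetical and not taken from Aryan's project.

```python
def select_hardest(samples, predict, budget):
    """Return the `budget` samples where the model's error is largest.

    `samples` is a list of dicts with an "image" and the human "steering"
    value; `predict` maps an image to a predicted steering value.
    Both formats are assumptions made for this sketch.
    """
    scored = [
        (abs(predict(sample["image"]) - sample["steering"]), index)
        for index, sample in enumerate(samples)
    ]
    scored.sort(reverse=True)  # largest error first
    return [samples[index] for _, index in scored[:budget]]


if __name__ == "__main__":
    # Stand-in data: "images" are plain numbers and the model is a toy function.
    data = [
        {"image": 0.0, "steering": 0.0},
        {"image": 1.0, "steering": 0.2},
        {"image": 2.0, "steering": 0.9},
    ]
    toy_model = lambda image: 0.1 * image
    print(select_hardest(data, toy_model, budget=2))
```

In a training loop, the selected samples would be added to (or weighted more heavily in) the next round of training, rather than growing the dataset uniformly.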

Yin’s project focuses on using a neural network to estimate the distance to objects from a single camera (monocular depth estimation). This will then be used as part of a SLAM algorithm for vehicle localisation. The network will also be used by a number of different systems in the car, to replace the range data provided by our current LiDAR.

Original + Depth Estimation

Next Semester

Next semester we will focus on tightly integrating these systems, and on working with our simulations team so we can test in the simulator. This is in preparation for our end-of-year demonstration, which will be performed in simulation this year.

In addition, we are developing a standalone lane-keeping assistance system that will be used to perform on-road testing.